Revolutionizing Apple Devices: The Impact of AI on iPhone, iPad & Mac in Autumn

Quick Links

- What is Apple Intelligence?

- Siri Gets Smart

- Apple Intelligence Speaks Your Language

- Generate Images Like Custom Emoji

- Take Actions Across Apps

- Personal Context is Everything

- Privacy is Built-In

- Integrated ChatGPT

- Apple Intelligence Compatibility

Key Takeaways

- Fall 2024 brings Apple Intelligence to iPhone, iPad, and Mac models with an A17 Pro or M1 processor and later.

- Expect smarter Siri interactions, text generation tools, image generation capabilities, and the ability to control various system-wide actions with natural language commands.

- Apple prioritizes privacy with on-device processing and plans for future chip designs to enhance AI.

If you have an iPhone 15 Pro or an iPad or Mac with an M1 processor or better, Apple Intelligence could transform how you interact with your smartphone, tablet, and computer. Here’s what to expect ahead of the launch in fall 2024.

What is Apple Intelligence?

Apple Intelligence is Apple’s version of large language model (LLM) artificial intelligence. It’s similar to existing tools such as ChatGPT and Google Gemini, except that it works solely within Apple’s ecosystem. Many of Apple’s existing tools and apps will receive support for Apple Intelligence when the feature launches.

These new AI features are set to arrive in the fall with the release of iOS 18, iPadOS 18, and macOS 15 Sequoia.

Siri Gets Smart

At the heart of Apple Intelligence is an improved version of Siri, the company’s digital assistant. Apple promises that Apple Intelligence will make Siri more personal, more natural, and better at surfacing relevant information. Siri is the interface through which users will primarily interact with the AI model.

The new and improved Siri can better understand spoken requests, including those where you stumble over your words. Context is maintained between requests, so you can issue a follow-up command that continues the thread of your conversation. For example, you might ask for the weather in one location, then ask for a reminder tied to that same place without mentioning its name again.

You’ll be able to issue more complex commands, and Siri should be able to understand them. You might ask Siri to find photos of a specific person taken in a certain location, edit one, and then send it to a contact. Context is maintained throughout, so Siri stays on the same page every step of the way.

A new feature called type-to-Siri lets you issue commands to Siri without using your voice. Not only does this mean you can issue “silent” commands anywhere by simply typing, but it effectively turns Siri into a ChatGPT-like prompt interface.

Siri will also be able to understand what’s happening on your screen at any given moment. This will allow you to issue commands related to whatever information is on-screen. One example is adding an address to a contact card while chatting in a Messages window.

On the iPhone, Siri also gets a brand-new look. Instead of the usual Siri bubble at the bottom of the screen, activating Siri will now place a glowing border around the edge of the display. On the Mac, Siri looks largely unchanged, with conversations taking place in the top-right corner of the screen.

Apple stressed that Siri improvements will continue over the course of the next update cycle, so expect the assistant to get smarter with new updates to iOS 18, iPadOS 18, and macOS 15 Sequoia.

Apple Intelligence Speaks Your Language

Apple Intelligence allows you to generate and parse text in ways that should be useful right across the system, whether you’re on a phone, tablet, or computer. Apple has introduced a set of writing tools that allow you to highlight and then rewrite sections of text, adjusting for tone. You can generate new versions or revert to the original text at any time.

Context-aware replies let you generate responses based on the contents of a message or email. These appear in the QuickType box on iPhone and provide prompts to gather the information needed for a reply. You can tap a few options and Apple Intelligence will draft a response accordingly.

In addition to rewriting and responding, a new proofreading tool shows grammar and spelling mistakes. You can move through each suggestion one by one, or accept everything with a tap or a click.

Apple Intelligence can analyze bodies of text and make decisions based on their context. In your email inbox, this powers smart prioritization, which places your most important messages at the top. You’ll also get summaries at the top of long email chains, allowing you to quickly catch up on threads you’ve missed.

You will also be able to transcribe voice recordings made directly in Notes (a new iOS 18 feature) and in the Phone app on your iPhone.

Lastly, Apple Intelligence will sort your notifications based on importance. A new “Reduce Interruptions” toggle will only surface the most important notifications, like a smarter version of the Time Sensitive notifications introduced in iOS 15.

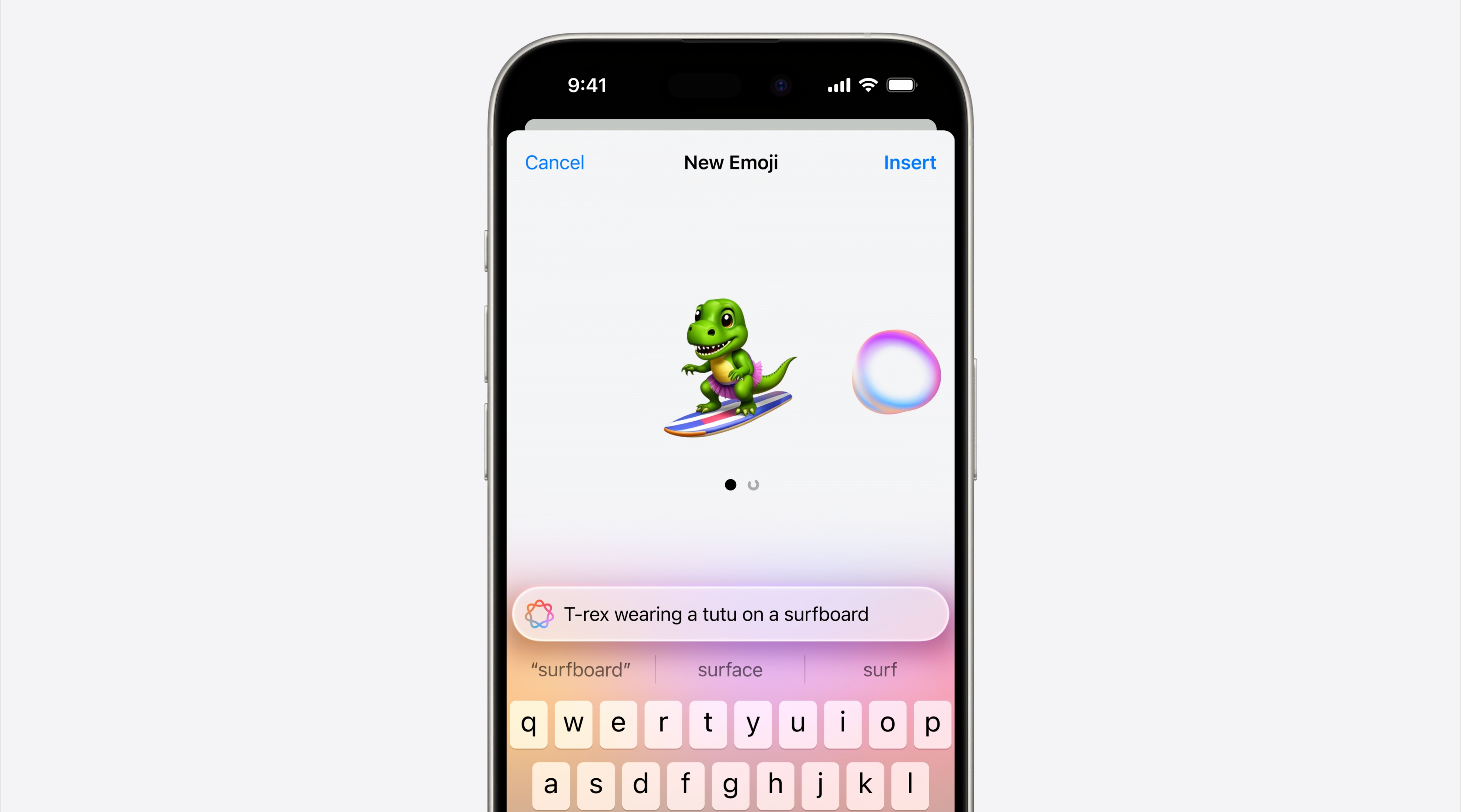

Generate Images Like Custom Emoji

Apple’s model also includes generative image capabilities, starting with Genmoji. Short for “generative emoji,” Genmoji lets Apple Intelligence create new emoji based on your prompts, with multiple options for you to choose from. You can then send them as tapbacks, stickers, or in-line emoji in your messages. Apple also showed off a feature that generates emoji based on what your contacts look like.

Image Playground is Apple’s version of a generative AI image tool, like DALL-E and Midjourney. This tool will be available as a standalone app; will be integrated into apps like Messages, Freeform, and Pages; and can be added to third-party apps using Apple’s API.

It works by letting you tag themes, items, and accessories in a visual interface, then generating images based on what you’ve selected. You can type prompts manually or add items from a list of suggestions, and choose styles like sketch, illustration, and animation. Image generation takes place on your device.

An extension of Image Playground called Image Wand adds context-aware generation to other apps. By selecting the Image Wand from the list of tools that appears in the Notes app, you can generate images based on the context of your note or transform rough sketches into finished AI-generated artworks.

Apple Intelligence also brings prompt-based editing to Photos. Not only can you bark instructions at the app to perform standard edits like making an image warmer, but you can also use an image clean-up tool to remove unwanted items from images.

Apple is also making it easier to create memory movies from your Photos library. Rather than manually trawling through your library for relevant images, you can issue a prompt and Photos will select the images and generate a movie for you.

It should come as no surprise that the images featured in Apple’s WWDC presentation had that generative AI “look” to them. This is par for the course when it comes to AI-generated images.

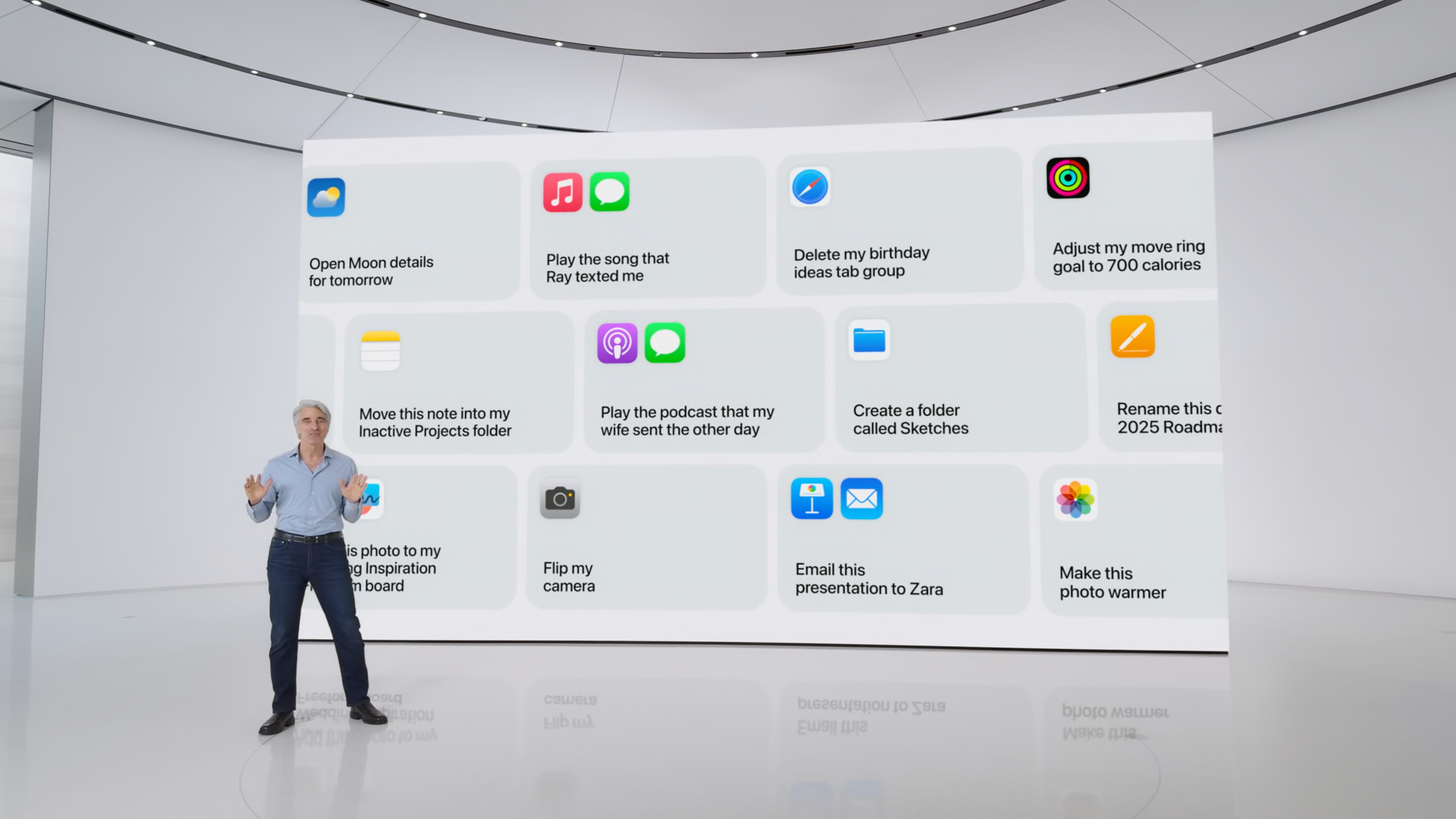

Take Actions Across Apps

Where Apple Intelligence starts to deviate from tools like ChatGPT is in its ability to perform actions. This is a unique position that only platform holders can take, and Apple appears to be wasting no time in bringing prompt-based actions to iOS, iPadOS, and macOS.

Apple gave plenty of examples during its WWDC reveal, from simple commands like “create a folder called New” and “flip my camera” to more advanced context-specific commands like “play the podcast my wife sent the other day.”

Some of the other examples that flashed past in the background included deleting specific tab sessions, finding and then applying edits to photos, adding items to notes, and playing music.

We’ll have to wait and see how this pans out, but the range of commands Apple demonstrated was broad enough to get us excited about the potential. The ultimate goal here should be to build enough confidence that users don’t hesitate to perform everyday tasks with Apple Intelligence.

If Apple can pull it off, this could be something special.

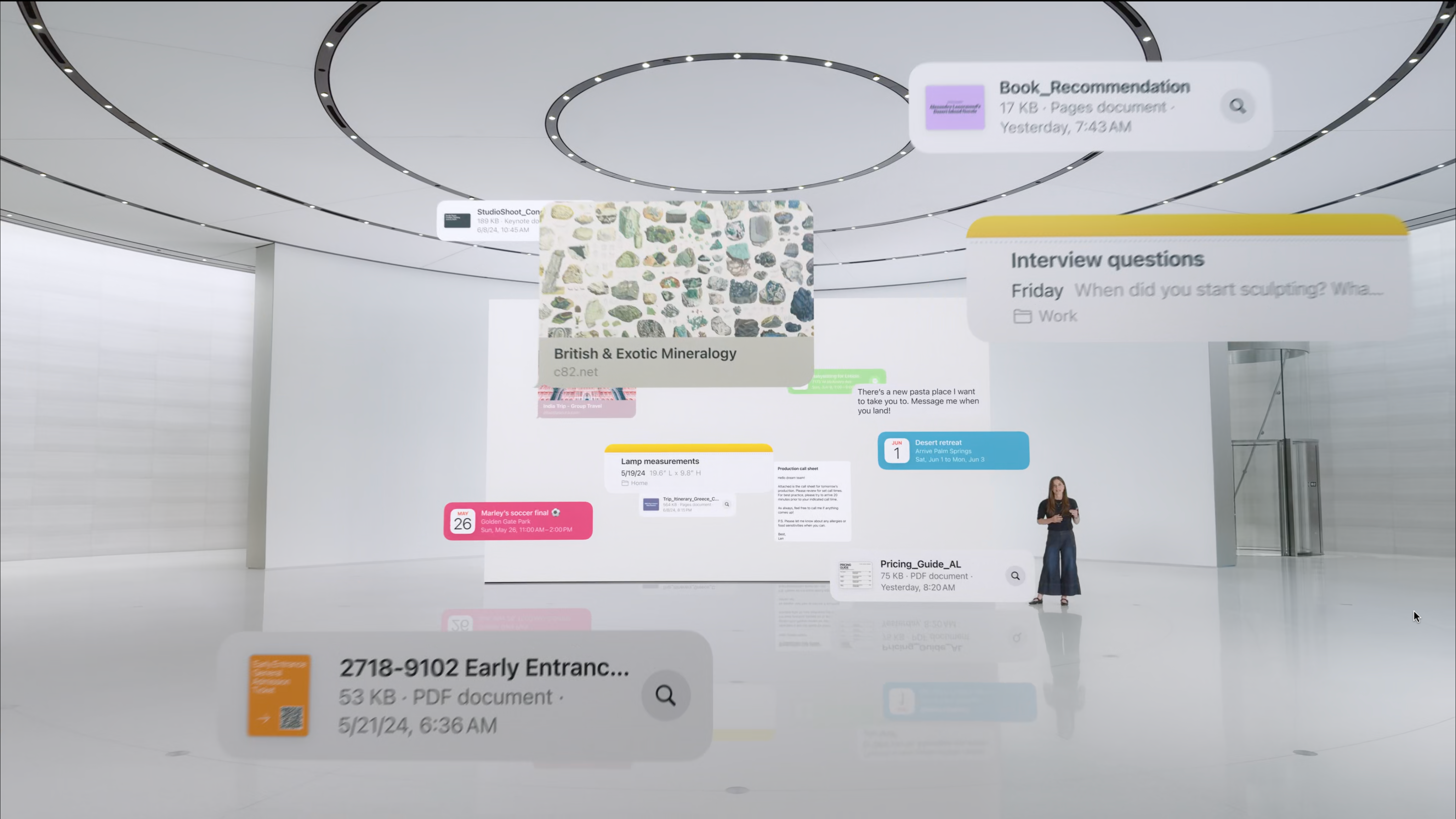

Personal Context is Everything

The real difference between Apple’s implementation and something like ChatGPT is that your iPhone (or iPad, or Mac) is at the core of the experience. Your device already understands a lot about you: the people you talk to, when you leave for work, the places you frequent, and so on.

By leveraging this context, these AI-powered operations become personal, which makes them far more useful and relevant. If your iPhone understands who your boss is, when you talk to them, and what tone is appropriate, the email replies it helps you write will be a much better fit.

Search is one area where this context can really come in handy. With Apple Intelligence, you can ask Siri to find a link someone sent you last week, even if you can’t remember whether it arrived in an email or a message. You can also surface information from conversations that you forgot to make a note of, like an address or the time you’re supposed to be meeting.

Siri can draw on the information already stored on your device. Some examples include the contents of your inbox, the files you’ve recently saved, topics in message threads, reminders you’ve forgotten about, links you’ve shared, upcoming calendar events, passes you’ve added to the Wallet app, and so on.

Privacy is Built-In

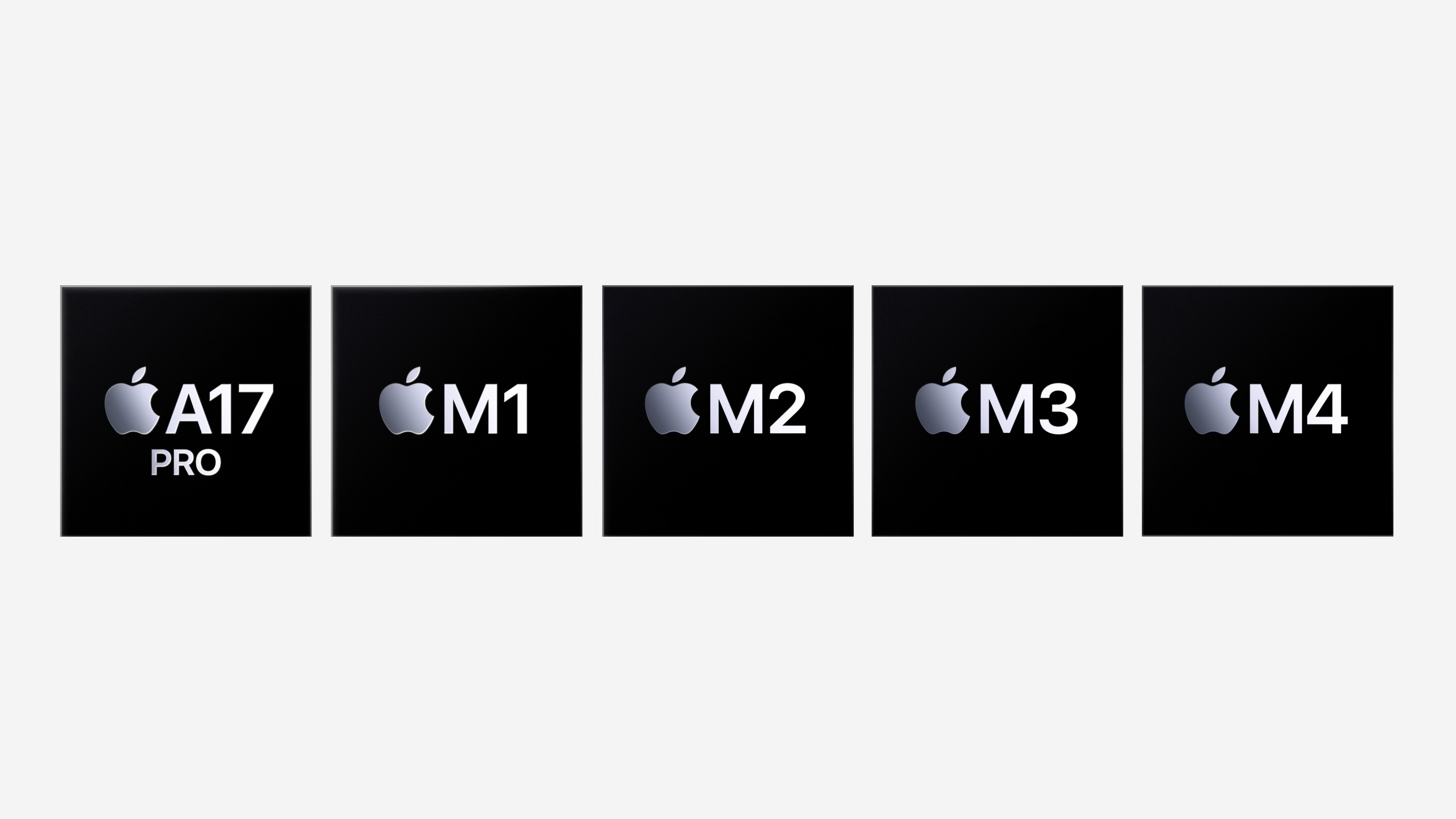

The other big deal with Apple’s implementation is the company’s focus on privacy. Apple has positioned itself as a company that goes out of its way to respect user privacy, and Apple Intelligence carries this torch forward. Many of these requests will take place entirely on-device.

For tasks that require more processing power, Apple’s Private Cloud Compute steps in. According to Apple, your device will only send relevant data to its servers when necessary, and that data is never stored and never accessible to Apple. Apple built these servers using its own Apple Silicon chips specifically for Apple Intelligence.

It remains to be seen just how many of these requests can be performed on-device. As devices become more powerful, it’s likely we’ll see the majority of these operations completed offline in the future. Apple is all-in on AI at this point, so expect future chip designs to prioritize Apple Intelligence capabilities.

Integrated ChatGPT

Siri will also be able to hand requests off to ChatGPT, powered by GPT-4o, arriving “later this year” according to the WWDC announcement. Apple claims that requests to ChatGPT won’t be logged, and if you’re a subscriber you’ll be able to connect your OpenAI account to access premium features.

When Siri detects that ChatGPT may be able to help out, it will offer to send your request or image to the service. Once you’ve given your permission, the request will be processed and you’ll get a response. You can also use GPT-4o within Apple’s Writing Tools to generate text, and use DALL-E to generate images.

ChatGPT integration will be free to all Apple Intelligence users.

Apple Intelligence Compatibility

Unfortunately, Apple Intelligence requires a recent Apple device: an iPhone 15 Pro (A17 Pro) or an iPad or Mac with an M1 processor or later. The iPhone 16 and iPhone 16 Pro are both expected to support the service thanks to the upcoming A18 chip.

While Apple Intelligence might have been the biggest announcement of WWDC 2024, Apple also sent shockwaves around the world with the announcement of an iPadOS Calculator app.

- Title: Revolutionizing Apple Devices: The Impact of AI on iPhone, iPad & Mac in Autumn

- Author: Mark

- Created at : 2024-12-19 23:10:28

- Updated at : 2024-12-25 00:48:08

- Link: https://some-guidance.techidaily.com/revolutionizing-apple-devices-the-impact-of-ai-on-iphone-ipad-and-mac-in-autumn/

- License: This work is licensed under CC BY-NC-SA 4.0.